How to Build Fat Jar

The method varies depending on the type of build tool you are using.

For Maven, you should use the maven-assembly-plugin,

while for Gradle, you need to use the Shadow Plugin.

In most cases, using one of these two plugins will solve the problem. However, Spring Boot requires additional handling due to its different execution method.

Boot-Jar

This is the officially recommended approach in Spring.

According to the explanation, Java does not provide a method to load nested JAR files, which can be a problem in environments where you need to run without extracting them.

Therefore, you should use a Shaded Jar (Fat Jar). It collects all classes from all JAR files, packages them into a single JAR file.

However, as it becomes challenging to see the libraries that exist in the actual application, Spring provides an alternative method.

If you’re curious about how this is implemented in detail, please refer to the official documentation.

Configuration

1. Adding Modules

implementation 'org.springframework.boot:spring-boot-loader'

The mentioned modules help in creating Spring Boot as an Executable Jar or War.

2. Modify Manifest

Because it should be executed through JarLauncher, Manifest needs to be modified.

The contents should exist in META-INF/MANIFEST.MF and should be recorded during the build.

The official documentation provides the following guidance:

|

|

In my case, I applied it as follows:

|

|

Issues

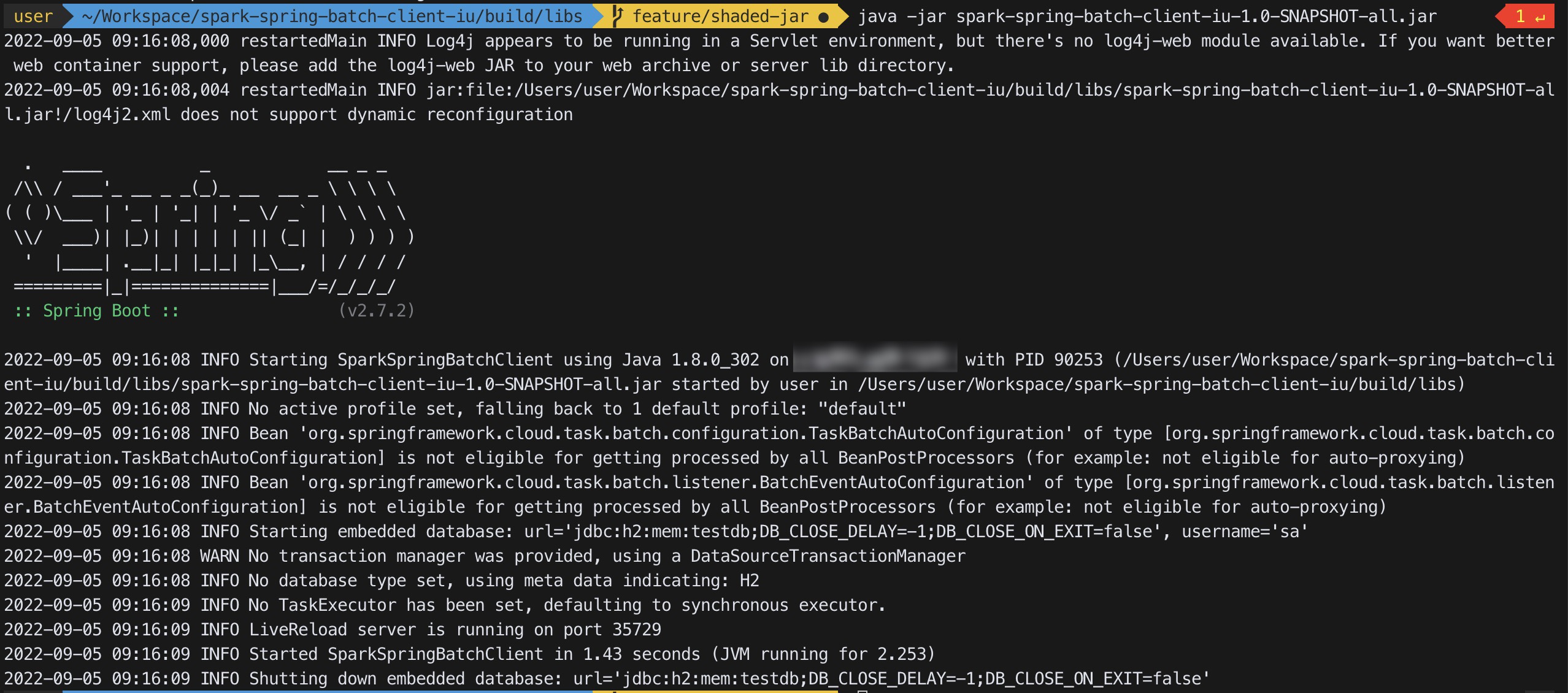

When executed using the java -jar xxx.jar command, it works as expected.

However, problems occur when running it within Spark.

The issue stemmed from a dependency version mismatch.

At my current workplace, we’re running Spark version 2.4, and the bundled Gson version was older than what the application required.

Consequently, the application failed to run due to the utilization of the outdated Gson library.

I explored options for forcibly using the latest version of Gson.

The initial approach involved specifying the dependency version to use.

(For your information, we are already using a relatively up-to-date Gson version without employing this method.)

However, it didn’t work due to Spark’s preference for its embedded libraries.

The second method is outlined in the official Spark documentation.

spark.driver.userClassPathFirst

spark.executor.userClassPathFirst

Both of these variables have a default value of False.

The documentation specifies that the user-provided --jars option is given higher priority.

This can result in added complexity, particularly when managing an increasing number of jars, and it might introduce issues with experimental functionality.

Since it didn’t deliver the intended behavior, and it wasn’t our original intent, we have ceased its usage.

To resolve this issue, you should utilize relocation (renaming) of dependencies, which is supported by the Shadow Plugin.

Shadow Plugin

It consolidates the project’s dependency classes and resources into a single JAR.

This offers two distinct advantages:

- It generates a deployable executable JAR.

- It bundles and relocates common dependencies from the library to prevent classpath conflicts.

Referring to a more comprehensive description of the benefits would be beneficial(written in korean).

Add Option For Spring

To build a Spring Application with the Shadow Plugin, you need additional options.

The following content is being added.

|

|

Detailed information can be found in the Git Issue.

Relocating Packages

This is a feature used when duplicate dependencies exist in the classpath, preventing the intended version from being used. It is a common occurrence in Hadoop and Spark.

This idea is simple. It involves identifying conflicting dependencies within the project and then changing their names (paths).

This prevents issues from arising since different named dependencies are used.

The implementation method is simple.

|

|

Change junit.framework to shadow.junit and apply it as follows, as needed.

|

|

If you wish to examine relocation in the Shadow Plugin, please refer to the link.

Modify the classpath.

In reality, I had hoped that everything would run smoothly up to this point.

However, when I attempt to execute java -jar project-all.jar, I can detect an anomaly.

Although Spring itself started, I observed that the Main Application did not execute.

When I investigated, the root cause was not immediately apparent.

Fortunately, through a process of trial and error, I was able to identify the issue, and it was related to the following link

At first, it was built with only the runtimeClassPath by default, but when I included the compileClassPath as follows, the problem was resolved.

|

|

As problem resolution was the primary focus, I didn’t investigate why including compileClassPath was necessary for it to function correctly.

Conclusion

I’ve explored how to build a Fat Jar (Shaded Jar) when using Spring.

While Boot Jar is the recommended method by Spring and offers the advantage of simplicity, it can be challenging to resolve Dependency conflicts in situations like using a Hadoop cluster.

The usage of the Shadow Plugin is a commonly employed method.

However, when applying it to Spring, you will need to write additional scripts. Nevertheless, this approach can help avoid the problems experienced with Boot Jar.

When dealing with Spark or Hadoop clusters, it is advisable to use the Shadow Plugin.

However, if you do not encounter these issues, it is recommended to use it based on your specific circumstances.

Reference

- Spring Official Guide : https://docs.spring.io/spring-boot/docs/current/reference/html/executable-jar.html#appendix.executable-jar.alternatives

- offical document translated in korean Article : https://wordbe.tistory.com/entry/Spring-Boot-2-Executable-JAR-스프링-부트-실행

- Spring fat jar Git Issue for shadow plugin : https://github.com/spring-projects/spring-boot/issues/1828#issuecomment-231104288

- StackOverflow, which was helpful for gaining an overall understanding : https://stackoverflow.com/questions/51206959/classnotfound-when-submit-a-spring-boot-fat-jar-to-spark